I have started learning about a distributed file system: Hadoop's HDFS. It is based on the Google File System (GFS) and is aimed at data-intensive applications. It is scalable and fault tolerant. It is designed to run on cheap commodity computers, which are bound to fail: either the whole machine crashes or its disks fail intermittently. Also, when you connect a large set of computers, some of them may get disconnected because of network failures. So in a normal file system a failure is an exceptional case, but in the Google File System and Hadoop it is the norm. That is, these file systems are designed with failure as a certainty.

Obviously your first question would be: how many computers are usually present in such a distributed environment? Hmm, I would guess in the thousands. They can sit in the same data center or be spread across different data centers altogether.

The next question would be: what are we going to process on this system of computers? With this many computers, surely it must be super complex computations like rocket science or weather forecasting? Well, you can use them for that as well, but even simple problems can need a big set of machines. Let me give you an example. You have a simple text file, and let us assume its size is about 16 KB. Now you write a C program to count the word occurrences in that file. How long does it take to run? Hardly a second. Now let me give you a 16 MB file; it will still complete in seconds. If I increase it to a 16 GB file, it might drag on for minutes. What if it is 16 TB? Now it might run for hours before completing. How nice it would be if I could somehow use parallelism to count the words. You can do that with our normal C program too, but getting the synchronization right takes a lot of programming time, and with synchronization come deadlocks and other monsters. And what kind of synchronization are you really looking at here, threads or processes? Either way, both are confined to the same machine.

Enter Hadoop. Hadoop provides a distributed environment for handling huge files, which lets you concentrate on the logic of your program rather than on worrying about how to make the program work across machines. Hadoop combined with MapReduce lets you write a simple program (or programs) to do the word count on really huge files. Remember, word counting is a simple program. Suppose you want to implement a web crawler, a natural language processing algorithm or some complex image processing algorithm: that is when you will really make use of Hadoop's distributed environment. The best part is the pitch: take a bunch of cheap, ordinary Linux machines, combine them, and you get a beast out of it.
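
To make the map and reduce idea a bit more concrete, here is a tiny single-machine sketch in plain Python. It is not Hadoop code and uses no Hadoop API; the function names `map_chunk`, `reduce_counts` and `word_count` are made up for illustration. Each chunk stands in for a piece of a huge file that could, in principle, be counted on a different machine.

```python
from collections import Counter
from functools import reduce


def map_chunk(chunk: str) -> Counter:
    """Map step: count the words in one chunk of text, independently of the others."""
    return Counter(chunk.lower().split())


def reduce_counts(left: Counter, right: Counter) -> Counter:
    """Reduce step: merge two partial counts into one."""
    return left + right


def word_count(chunks) -> Counter:
    """Count words over all chunks by mapping each chunk, then reducing the results."""
    return reduce(reduce_counts, map(map_chunk, chunks), Counter())


# Three small "chunks" standing in for pieces of a much larger file.
chunks = ["the quick brown fox", "the lazy dog", "the fox"]
totals = word_count(chunks)
print(totals.most_common(2))  # [('the', 3), ('fox', 2)]
```

Because no chunk needs to know about any other chunk during the map step, there is nothing to lock or synchronize until the partial counts are merged.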

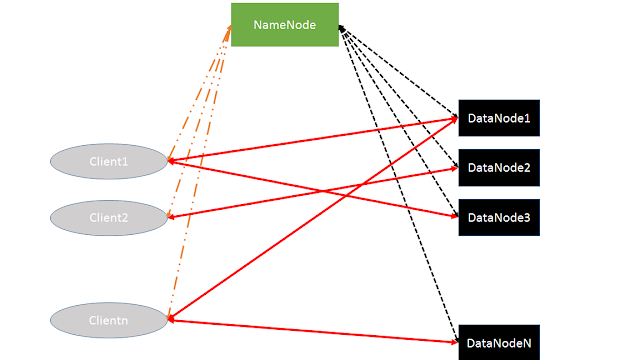

Hadoop follows a master/worker model. There is a master (the namenode), a set of workers (the datanodes), and then there are the clients. There is a lot of theory behind why we need this architecture, what benefits we gain from it, and why each component has to handle its responsibilities in a particular way. I would recommend reading the Google File System paper to understand it. In a future post, I will go through GFS.

In Hadoop, a file is divided into fixed-size blocks. Usually these are 64 MB blocks (that was the default in older releases; newer Hadoop versions default to 128 MB, and the size is configurable). The client asks the namenode to add the file to HDFS (the Hadoop file system). The file is split into blocks, and the namenode decides which datanodes will store each block. Each block is then copied (replicated) to 3 different datanodes. This is one of the ways of making the system more reliable. So a single file might be spread across multiple datanodes. The replication factor can be configured.
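
Here is a rough sketch of that bookkeeping in Python, using the 64 MB block size and 3 replicas mentioned above. The function `plan_blocks` and the datanode names are hypothetical, and the round-robin placement is my own simplification; as far as I understand, real HDFS placement also takes racks into account.

```python
import math

BLOCK_SIZE = 64 * 1024 * 1024  # 64 MB, the block size quoted in this post
REPLICATION = 3                # default replication factor quoted in this post


def plan_blocks(file_size, datanodes, block_size=BLOCK_SIZE, replication=REPLICATION):
    """Return a list of (block_index, block_length, replica_datanodes) for one file."""
    if replication > len(datanodes):
        raise ValueError("need at least as many datanodes as replicas")
    num_blocks = math.ceil(file_size / block_size)
    plan = []
    for i in range(num_blocks):
        # Every block is full-sized except possibly the last one.
        length = min(block_size, file_size - i * block_size)
        # Assumption: simple round-robin placement, only for illustration.
        replicas = [datanodes[(i + r) % len(datanodes)] for r in range(replication)]
        plan.append((i, length, replicas))
    return plan


nodes = ["dn1", "dn2", "dn3", "dn4"]          # hypothetical datanode names
plan = plan_blocks(200 * 1024 * 1024, nodes)  # a 200 MB file
for block in plan:
    print(block)
```

A 200 MB file comes out as three full 64 MB blocks plus one 8 MB block, and each block lands on three distinct datanodes.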

So the namenode tracks the file namespace and also the file-to-blocks mapping. Then where exactly do those blocks live, that is, which datanodes hold each block? The namenode tracks that too. All this information is kept in memory. Now obviously, if the namenode fails, everything is lost, right? And why keep it in memory at all? Well, it is fast. To avoid losing the data, there is a method. The namenode keeps a base image of the file system, the FsImage, and a log file, the EditLog, both on its local disk. Each time the file system is modified (a new file is added, a file is deleted, a file is changed), an entry is made in the EditLog. So when the namenode restarts after a failure, it loads the FsImage as the base of the file system, then reads the EditLog and replays those changes on top of it. Once everything is up to date, it writes the result back to the FsImage and truncates the EditLog so that fresh changes can be written to it.
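
The restart procedure described above can be sketched in a few lines of Python. This is only a toy model under my own assumptions: the namespace is a plain dictionary from path to block list, the edit log is a list of tuples, and `apply_edit` and `restart` are hypothetical names, not the real on-disk formats or namenode code.

```python
def apply_edit(namespace, edit):
    """Apply one edit log entry to the in-memory namespace (modified in place)."""
    op, path = edit[0], edit[1]
    if op == "add":
        namespace[path] = list(edit[2])  # edit[2] is the file's list of block ids
    elif op == "delete":
        namespace.pop(path, None)
    else:
        raise ValueError(f"unknown operation: {op}")


def restart(fsimage, editlog):
    """Rebuild the namespace from the base image, replay the log, then checkpoint."""
    # 1. Start from the last saved base image.
    namespace = {path: list(blocks) for path, blocks in fsimage.items()}
    # 2. Replay every logged change in order.
    for edit in editlog:
        apply_edit(namespace, edit)
    # 3. Save the result as the new image and start with an empty log.
    new_fsimage = {path: list(blocks) for path, blocks in namespace.items()}
    return namespace, new_fsimage, []


fsimage = {"/a.txt": ["blk_1"]}
editlog = [("add", "/b.txt", ["blk_2", "blk_3"]), ("delete", "/a.txt")]
namespace, new_fsimage, new_editlog = restart(fsimage, editlog)
print(namespace)  # {'/b.txt': ['blk_2', 'blk_3']}
```

The order of replay matters: the log is applied from oldest to newest entry, so the final namespace reflects the last change made to each path.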

So the datanodes hold the actual data. Each block is stored as a local file on the datanode. To read or write a file's blocks, the client asks the namenode which datanodes it should contact. The namenode returns a list of datanodes, and the client then contacts those datanodes directly to do the actual data transfer. This keeps bulk data traffic away from the namenode. The namenode keeps track of the datanodes by means of heartbeats. That is, every now and then each datanode sends the namenode a message reporting things like how many blocks it has, whether there are any disk failures, and so on. If the namenode does not receive a message from a datanode for a while, it assumes that the datanode has crashed and starts re-replicating the blocks that were on it to other datanodes, using the replicas that are still alive.
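
A small Python sketch of the heartbeat idea follows. The class `HeartbeatMonitor`, the helper `blocks_to_re_replicate`, the 30 second timeout and the node names are all hypothetical choices for illustration, not values or code from Hadoop. Timestamps are passed in explicitly so the example is easy to follow.

```python
class HeartbeatMonitor:
    """Remembers when each datanode was last heard from."""

    def __init__(self, timeout_seconds):
        self.timeout = timeout_seconds
        self.last_seen = {}  # datanode name -> time of its last heartbeat

    def heartbeat(self, datanode, now):
        """Record a heartbeat received from a datanode at time `now`."""
        self.last_seen[datanode] = now

    def dead_nodes(self, now):
        """Datanodes that have been silent for longer than the timeout."""
        return sorted(
            dn for dn, seen in self.last_seen.items() if now - seen > self.timeout
        )


def blocks_to_re_replicate(block_locations, dead):
    """Blocks that had at least one replica on a dead datanode."""
    dead = set(dead)
    return sorted(b for b, nodes in block_locations.items() if dead & set(nodes))


monitor = HeartbeatMonitor(timeout_seconds=30)  # assumed timeout, not Hadoop's
monitor.heartbeat("dn1", now=0)
monitor.heartbeat("dn2", now=0)
monitor.heartbeat("dn1", now=40)  # dn1 keeps reporting, dn2 goes silent

dead = monitor.dead_nodes(now=50)
block_locations = {"blk_1": ["dn1", "dn2"], "blk_2": ["dn1"], "blk_3": ["dn2"]}
lost = blocks_to_re_replicate(block_locations, dead)
print(dead, lost)  # ['dn2'] ['blk_1', 'blk_3']
```

Note that `blk_3` had its only copy on the dead node in this toy data, which is exactly the situation that keeping 3 replicas is meant to avoid.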

I would recommend going through this link, http://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-hdfs/HdfsDesign.html#Introduction, to learn more about the architecture of Hadoop.

Now you might think you need at least 3 machines: a client machine, a namenode machine and a datanode machine. But we can configure a single machine that runs both the datanode and the namenode, and we can run our client applications on that same machine.

Right now I am trying to install Hadoop on a single machine. I will update this post with a screenshot once it is done; currently I am facing some problems.

In future posts, I will go through MapReduce, the Google File System, Project Voldemort and Amazon's Dynamo.